The most important function defined in this module is notebook2script, so you may want to jump to it before scrolling through the rest, which explains the details behind the scenes of the conversion from notebooks to library. The main things to remember are:

- put `# export` on each cell you want exported

- put `# exports` on each cell you want exported with the source code shown in the docs

- put `# exporti` on each cell you want exported without it being added to `__all__`, and without it showing up in the docs

- one cell should contain `# default_exp` followed by the name of the module (with points for submodules and without the py extension) everything should be exported in (if one specific cell needs to be exported in a different module, just indicate it after `#export`: `#export special.module`)

- all left members of an equality, functions and classes will be exported, and variables that are not private will be put in the `__all__` automatically

- to add something to `__all__` if it's not picked automatically, write an exported cell with something like `#add2all "my_name"`

Examples of export

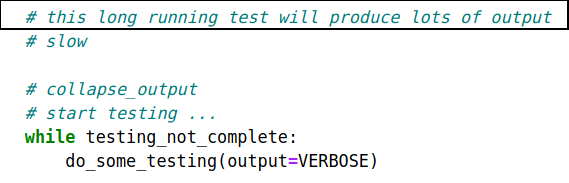

See these examples on different ways to use #export to export code in notebooks to modules. These include:

- How to specify a default for exporting cells

- How to hide code and not export it at all

- How to export different cells to specific modules

For bootstrapping nbdev we have a few basic foundations defined in imports, which we test and show here. First, a simple config file class, Config, that reads the content of your settings.ini file and makes it accessible:

create_config("github", "nbdev", user='fastai', path='..', tst_flags='tst', cfg_name='test_settings.ini', recursive='False')

cfg = get_config(cfg_name='test_settings.ini')

test_eq(cfg.lib_name, 'nbdev')

test_eq(cfg.git_url, "https://github.com/fastai/nbdev/tree/master/")

test_eq(cfg.path("lib_path"), Path.cwd().parent/'nbdev')

test_eq(cfg.path("nbs_path"), Path.cwd())

test_eq(cfg.path("doc_path"), Path.cwd().parent/'docs')

test_eq(cfg.custom_sidebar, 'False')

test_eq(cfg.recursive, 'False')
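To make the idea of a config class concrete, here is a minimal sketch built on the standard library configparser. It is not nbdev's Config implementation: the class name ConfigSketch, the SETTINGS text and the /repo root are all assumptions made for illustration. It only shows how values from a [DEFAULT] section can be exposed as attributes and as paths, and why they come back as strings such as 'False'.

```python
from configparser import ConfigParser
from pathlib import Path

# Hypothetical settings content; a real settings.ini has many more keys.
SETTINGS = """
[DEFAULT]
lib_name = nbdev
lib_path = nbdev
nbs_path = nbs
recursive = False
"""

class ConfigSketch:
    "Illustrative stand-in for a settings.ini reader (not nbdev's Config)."
    def __init__(self, text, root):
        parser = ConfigParser()
        parser.read_string(text)
        self._d = parser['DEFAULT']   # every value is stored as a string
        self._root = Path(root)

    def __getattr__(self, k):
        # Only called when normal attribute lookup fails.
        if k.startswith('_'): raise AttributeError(k)
        try: return self._d[k]
        except KeyError: raise AttributeError(k) from None

    def path(self, k):
        "Resolve a configured folder name against the project root."
        return self._root / self._d[k]

cfg_sketch = ConfigSketch(SETTINGS, '/repo')
print(cfg_sketch.lib_name)          # nbdev
print(cfg_sketch.recursive)         # False (the string, not the boolean)
print(cfg_sketch.path('lib_path'))
```

Because configparser returns strings, a caller has to compare against 'False' rather than the boolean, which matches the tests above.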

A Jupyter notebook is a JSON file behind the scenes. We could just read it with the json module, which returns a nested dictionary of dictionaries and lists of dictionaries, but there are some small differences between reading the raw JSON and using the tools from nbformat, so we'll use nbformat.

fname can be a string or a pathlib object.

test_nb = read_nb('00_export.ipynb')

The root has four keys: cells contains the cells of the notebook, metadata holds information such as the version of Python used to execute the notebook, and nbformat and nbformat_minor give the version of nbformat.

test_nb.keys()

test_nb['metadata']

f"{test_nb['nbformat']}.{test_nb['nbformat_minor']}"

The cells key then contains a list of cells. Each one is a new dictionary that contains entries like the type (code or markdown), the source (what is written in the cell) and the output (for code cells).

test_nb['cells'][0]
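The structure described above can also be seen without nbformat. The sketch below builds a tiny, made-up notebook as a JSON string (it is not 00_export.ipynb) and reads it with the json module. One difference you may notice with raw JSON is that a cell's source is typically stored as a list of lines, whereas nbformat gives you a single string.

```python
import json

# A hand-written, minimal notebook used only for illustration.
raw = json.dumps({
    "cells": [
        {"cell_type": "code", "execution_count": None, "metadata": {},
         "outputs": [], "source": ["#export\n", "def f(): pass"]},
        {"cell_type": "markdown", "metadata": {}, "source": ["Some text"]},
    ],
    "metadata": {},
    "nbformat": 4,
    "nbformat_minor": 4,
})

nb = json.loads(raw)
root_keys = sorted(nb.keys())
version = f"{nb['nbformat']}.{nb['nbformat_minor']}"
first_source = "".join(nb["cells"][0]["source"])  # join the list of lines ourselves

print(root_keys)     # ['cells', 'metadata', 'nbformat', 'nbformat_minor']
print(version)       # 4.4
print(first_source)
```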

The following functions are used to catch the flags used in the code cells.

pat can be a string or a compiled regex. If code_only=True, this function ignores non-code cells, such as markdown.

cell = test_nb['cells'][1].copy()

assert check_re(cell, '#|export') is not None

assert check_re(cell, re.compile('#|export')) is not None

assert check_re(cell, '# bla') is None

cell['cell_type'] = 'markdown'

assert check_re(cell, '#|export') is None

assert check_re(cell, '#|export', code_only=False) is not None

cell = test_nb['cells'][0].copy()

cell['source'] = "a b c"

assert check_re(cell, 'a') is not None

assert check_re(cell, 'd') is None

# show that searching with patterns ['d','b','a'] will match 'b'

# i.e. 'd' is not found and we don't search for 'a'

assert check_re_multi(cell, ['d','b','a']).span() == (2,3)
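The behavior exercised by these tests can be sketched in a few lines. The functions below are hypothetical stand-ins (note the _sketch suffix), not nbdev's source, and the choice of regex flags is an assumption: a string pattern is compiled on the fly, non-code cells are skipped when code_only is true, and the multi-pattern variant returns the first pattern that matches.

```python
import re

def check_re_sketch(cell, pat, code_only=True):
    "Return a match object if `pat` is found in the cell source, else None."
    if code_only and cell['cell_type'] != 'code': return None
    if isinstance(pat, str):
        pat = re.compile(pat, re.IGNORECASE | re.MULTILINE)  # assumed flags
    return pat.search(cell['source'])

def check_re_multi_sketch(cell, pats, code_only=True):
    "Try each pattern in order and stop at the first one that matches."
    for pat in pats:
        m = check_re_sketch(cell, pat, code_only)
        if m is not None: return m
    return None

demo_cell = {'cell_type': 'code', 'source': 'a b c'}
print(check_re_multi_sketch(demo_cell, ['d', 'b', 'a']).span())  # (2, 3)
```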

This function returns a regex object that can be used to find nbdev flags in multiline text.

- `body`: regex fragment to match one or more flags

- `n_params`: number of flag parameters to match and catch (-1 for any number of params; `(0,1)` for 0 or 1 params)

- `comment`: explains what the compiled regex should do

is_export returns:

- a tuple of ("module name", "external boolean" (`False` for an internal export)) if `cell` is to be exported, or

- `None` if `cell` will not be exported.

The cells to export are marked with #export/#exporti/#exports, potentially with a module name where we want it exported. The default module is given in a cell of the form #default_exp bla inside the notebook (usually at the top), though in this function it needs to be passed in (the final script will read the whole notebook to find it).

- a cell marked with `#export`/`#exporti`/`#exports` will be exported to the default module

- an exported cell marked with `special.module` appended will be exported in `special.module` (located in `lib_name/special/module.py`)

- a cell marked with `#export` will have its signature added to the documentation

- a cell marked with `#exports` will additionally have its source code added to the documentation

- a cell marked with `#exporti` will not show up in the documentation, and will also not be added to `__all__`.

cell = test_nb['cells'][1].copy()

test_eq(is_export(cell, 'export'), ('export', True))

cell['source'] = "# exports"

test_eq(is_export(cell, 'export'), ('export', True))

cell['source'] = "# exporti"

test_eq(is_export(cell, 'export'), ('export', False))

cell['source'] = "# export mod"

test_eq(is_export(cell, 'export'), ('mod', True))

Stops at the first cell containing # default_exp (if there are several) and returns the module name that follows it. Returns None if no cell contains that flag.

test_eq(find_default_export(test_nb['cells']), 'export')

assert find_default_export(test_nb['cells'][2:]) is None
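A minimal sketch of this lookup, assuming cells are plain dictionaries with cell_type and source keys, could look like the following. The regex and the name find_default_export_sketch are illustrative and not taken from nbdev.

```python
import re

# Hypothetical pattern for a "# default_exp module.name" line.
_DEFAULT_EXP = re.compile(
    r'^[ \t]*#[ \t]*default_exp[ \t]+(\S+)[ \t]*$',
    re.IGNORECASE | re.MULTILINE)

def find_default_export_sketch(cells):
    "Return the module named by the first default_exp flag, or None."
    for cell in cells:
        if cell.get('cell_type') != 'code': continue
        m = _DEFAULT_EXP.search(cell['source'])
        if m: return m.group(1)   # stop at the first match
    return None

demo_cells = [
    {'cell_type': 'markdown', 'source': '# Title'},
    {'cell_type': 'code', 'source': '# default_exp export'},
    {'cell_type': 'code', 'source': '# default_exp other'},
]
print(find_default_export_sketch(demo_cells))       # export
print(find_default_export_sketch(demo_cells[:1]))   # None
```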

The following functions make a list of everything that is exported to prepare a proper __all__ for our exported module.

tst = _re_patch_func.search("""

@patch

@log_args(a=1)

def func(obj:Class):""")

tst, tst.groups()

This function only picks the zero-indented objects on the left side of an =, functions or classes (we don't want the class methods, for instance) and excludes private names (those that begin with _) but not dunder names. It only returns function and class names (not the objects) when func_only=True.

To work properly with the functionality fastai adds to Python, this function ignores functions decorated with @typedispatch (since they are defined multiple times) and properly unwraps functions decorated with @patch.

test_eq(export_names("def my_func(x):\n pass\nclass MyClass():"), ["my_func", "MyClass"])
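The selection rule can be illustrated with a different technique from the one used in this module: the sketch below walks the top-level nodes of a parsed syntax tree with the standard ast module, rather than using regular expressions. The function name top_level_names is hypothetical, it needs syntactically valid code, and it does not handle @patch or @typedispatch.

```python
import ast

def top_level_names(code, func_only=False):
    "Collect zero-indented function, class and assigned names from `code`."
    names = []
    for node in ast.parse(code).body:   # only top-level statements
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
            found = [node.name]
        elif isinstance(node, ast.Assign) and not func_only:
            found = [t.id for t in node.targets if isinstance(t, ast.Name)]
        else:
            found = []
        for n in found:
            is_dunder = n.startswith('__') and n.endswith('__')
            if not n.startswith('_') or is_dunder:   # drop private, keep dunder
                names.append(n)
    return names

demo_code = (
    "def my_func(x):\n    pass\n"
    "class MyClass:\n    def method(self): pass\n"
    "_private = 1\n"
    "public = 2\n"
    "__version__ = '0.1'\n"
)
print(top_level_names(demo_code))                  # ['my_func', 'MyClass', 'public', '__version__']
print(top_level_names(demo_code, func_only=True))  # ['my_func', 'MyClass']
```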

Sometimes objects are not picked to be automatically added to the __all__ of the module, so you will need to add them manually. To do so, create an exported cell with the following code: _all_ = ["name", "name2"]

If you need a from __future__ import in your library, you can export your cell with special comments:

from __future__ import annotations

#export

class ...

Notice that #export is after the __future__ import. Because __future__ imports must occur at the beginning of the file, nbdev allows __future__ imports in the flags section of a cell.

def _run_from_future_import_test():

fname = 'test_from_future_import.txt'

with open(fname, 'w', encoding='utf8') as f: f.write(txt)

actual_code=_from_future_import(fname, flags, code, {})

test_eq(expected_code, actual_code)

with open(fname, 'r', encoding='utf8') as f: test_eq(f.read(), txt)

actual_code=_from_future_import(fname, flags, code)

test_eq(expected_code, actual_code)

with open(fname, 'r', encoding='utf8') as f: test_eq(f.read(), expected_txt)

os.remove(fname)

_run_from_future_import_test()

flags="""from __future__ import annotations

from __future__ import generator_stop

#export"""

code = ""

expected_code = ""

fname = 'test_from_future_import.txt'

_run_from_future_import_test()

When we write

from lib_name.module.submodule import bla

in a notebook, it needs to be converted to something like

from .module.submodule import bla

or

from .submodule import bla

depending on where we are. This function deals with renaming those imports.

Note that imports of the form

import lib_name.module

are left as is, since the syntax import module does not work for relative imports.

test_eq(relative_import('nbdev.core', Path.cwd()/'nbdev'/'data.py'), '.core')

test_eq(relative_import('nbdev.core', Path('nbdev')/'vision'/'data.py'), '..core')

test_eq(relative_import('nbdev.vision.transform', Path('nbdev')/'vision'/'data.py'), '.transform')

test_eq(relative_import('nbdev.notebook.core', Path('nbdev')/'data'/'external.py'), '..notebook.core')

test_eq(relative_import('nbdev.vision', Path('nbdev')/'vision'/'learner.py'), '.')
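One way to reproduce the results of these tests is sketched below. It is not the library's implementation: relative_import_sketch and its lib_name parameter are made up for this example. The idea is to strip the package prefix shared by the module name and the file path, then emit one dot per remaining path component.

```python
from pathlib import Path

def relative_import_sketch(name, fname, lib_name='nbdev'):
    "Rewrite absolute module `name` relative to the file `fname`."
    parts = Path(fname).parts
    mods = name.split('.')
    if lib_name not in parts or mods[0] != lib_name:
        return name                      # not part of the library: leave as is
    # Keep the path from the last directory named `lib_name` onwards.
    start = len(parts) - 1 - parts[::-1].index(lib_name)
    file_parts = list(parts[start:])     # e.g. ['nbdev', 'vision', 'data.py']
    # Skip the packages shared by the module name and the file location.
    i = 0
    while i < len(mods) and i < len(file_parts) - 1 and mods[i] == file_parts[i]:
        i += 1
    # One dot for the current package, one more for each level to climb.
    return '.' * (len(file_parts) - i) + '.'.join(mods[i:])

print(relative_import_sketch('nbdev.core', Path('nbdev')/'vision'/'data.py'))    # ..core
print(relative_import_sketch('nbdev.vision', Path('nbdev')/'vision'/'learner.py'))  # .
```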

To be able to build back a correspondence between functions and the notebooks they are defined in, we need to store an index. It's done in the private module _nbdev inside your library, and the following functions are used to define it.

return_type tells us if the tuple returned will contain lists of lines or strings with line breaks.

We treat the first comment line as a flag.

def _test_split_flags_and_code(expected_flags, expected_code):

cell = nbformat.v4.new_code_cell('\n'.join(expected_flags + expected_code))

test_eq((expected_flags, expected_code), split_flags_and_code(cell))

expected=('\n'.join(expected_flags), '\n'.join(expected_code))

test_eq(expected, split_flags_and_code(cell, str))

_test_split_flags_and_code([

'#export'],

['# TODO: write this function',

'def func(x): pass'])
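The split tested above can be approximated as follows. This is a simplified sketch under stated assumptions: the small set of flags in _KNOWN_FLAG is invented for the example and is much narrower than what nbdev recognizes, and blank lines or magics in the flags section are not handled.

```python
import re

# Hypothetical, deliberately small set of recognized flags.
_KNOWN_FLAG = re.compile(r'^\s*#\s*(export[si]?|default_exp|hide)\b')

def split_flags_and_code_sketch(source, return_type=list):
    "Split cell source into leading flag lines and the remaining code lines."
    lines = source.split('\n')
    split_at = 0
    for i, line in enumerate(lines):
        is_first_comment = i == 0 and line.lstrip().startswith('#')
        if is_first_comment or _KNOWN_FLAG.match(line): split_at = i + 1
        else: break                      # first non-flag line starts the code
    flags, code = lines[:split_at], lines[split_at:]
    if return_type is str: return '\n'.join(flags), '\n'.join(code)
    return flags, code

demo_source = '#export\n# TODO: write this function\ndef func(x): pass'
print(split_flags_and_code_sketch(demo_source))
# (['#export'], ['# TODO: write this function', 'def func(x): pass'])
```

Note that the TODO comment stays with the code: only the first comment line, or a line matching a known flag, is treated as a flag.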

A new module filename is created each time a notebook has a cell marked with #default_exp. In your collection of notebooks, you should only have one notebook that creates a given module, since modules are re-created each time you do a library build (to ensure the library is clean). Note that any file you create manually will never be overwritten (unless it has the same name as one of the modules defined in a #default_exp cell), so you are responsible for cleaning those up yourself.

fname is the notebook that contained the #default_exp cell.

Create module files for all #default_exp flags found in files and return a list containing the names of modules created.

Note: The number of modules returned will be less than the number of files passed in if some files do not contain a #default_exp flag.

By creating all module files before calling _notebook2script, the order of execution no longer matters - so you can now export to a notebook that is run "later".

You might still have problems when

- converting a subset of notebooks or

- exporting to a module that does not have a #default_exp yet

in which case _notebook2script will print warnings like:

Warning: Exporting to "core.py" but this module is not part of this build

If you see a warning like this

- and the module file (e.g. "core.py") does not exist, you'll see a FileNotFoundError

- if the module file exists, the exported cell will be written, even if the exported cell is already in the module file

with tempfile.TemporaryDirectory() as d:

os.makedirs(Path(d)/'a', exist_ok=True)

(Path(d)/'a'/'f.py').touch()

os.makedirs(Path(d)/'a/b', exist_ok=True)

(Path(d)/'a'/'b'/'f.py').touch()

add_init(d)

assert not (Path(d)/'__init__.py').exists()

for e in [Path(d)/'a', Path(d)/'a/b']:

assert (e/'__init__.py').exists()

Ignores hidden directories and filenames starting with _. If the recursive argument is not set to True or False, its value is retrieved from settings.ini, with a default of False.

assert not nbglob().filter(lambda x: '.ipynb_checkpoints' in str(x))

Optionally you can pass a config_key to dictate which directory you are pointing to. By default it's nbs_path, so without any parameters passed in it will look for notebooks. To have it find library files instead, simply pass in lib_path.
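The filtering rules described here (skip hidden directories, skip filenames starting with _, optionally recurse) can be sketched with pathlib alone. The function find_notebooks below is a hypothetical helper, not nbglob: it takes an explicit path instead of reading settings.ini, and the temporary directory layout is invented for the demonstration.

```python
from pathlib import Path
import tempfile

def find_notebooks(path, recursive=False, ext='.ipynb'):
    "List files with `ext` under `path`, skipping hidden dirs and _ files."
    path = Path(path)
    candidates = path.rglob(f'*{ext}') if recursive else path.glob(f'*{ext}')
    res = []
    for p in candidates:
        rel = p.relative_to(path)
        if any(part.startswith('.') for part in rel.parts[:-1]): continue  # hidden directory
        if rel.name.startswith('_'): continue                              # private notebook
        res.append(p)
    return sorted(res)

with tempfile.TemporaryDirectory() as d:
    root = Path(d)
    (root/'.ipynb_checkpoints').mkdir()
    (root/'sub').mkdir()
    for f in ['00_a.ipynb', '_b.ipynb', '.ipynb_checkpoints/00_a-checkpoint.ipynb', 'sub/01_c.ipynb']:
        (root/f).touch()
    flat = [p.relative_to(root).as_posix() for p in find_notebooks(root)]
    deep = [p.relative_to(root).as_posix() for p in find_notebooks(root, recursive=True)]

print(flat)  # ['00_a.ipynb']
print(deep)  # ['00_a.ipynb', 'sub/01_c.ipynb']
```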

get_config().path

Finds cells starting with #export and puts them into the appropriate module. If fname is not specified, this will convert all notebooks not beginning with an underscore in the nb_folder defined in settings.ini. Otherwise fname can be a single filename or a glob expression.

silent suppresses all printed output, and to_dict is used internally to convert the library to a dictionary.